Yes, we are still here!

It has been many seasons since I last posted. A lot has changed over the years. Old stories end. New stories begin. As they say, "time changes everything". Priorities change. But as things change, there are still things that remain constant, remain unchanged.

Fatdog is one of them.

Fatdog is still alive. Still kicking. Still actively maintained. By the same Fatdog team. Though we don't announce every single change that we make, updates still trickle into the Fatdog package repository. Eventually, when the time comes, there will be another release.

In the meantime, enjoy.


Who fact-checks the fact-checkers?

In the moment of confusion, who is the arbiter of truth? Who appoints the arbiter of truth?

Or shall we speak in one voice with Pilate, who asked: "What is truth?" (John 18:38)?


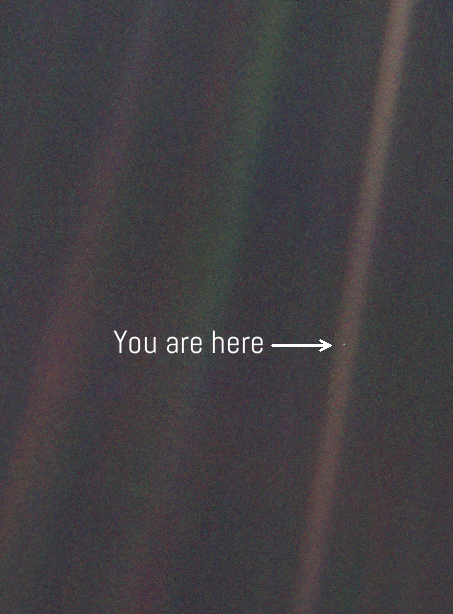

The Pale Blue Dot

Image of our homeworld taken from a distance of about 6 billion kilometres (approximately 40.4 AU, or 5.5 light-hours) by Voyager 1, on 14 Feb 1990. For context, at this location, Voyager was farther than the average orbital distance of Pluto.
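For the curious, the unit conversions behind those figures can be sketched in a few lines of Python. This is only a back-of-the-envelope check: the function names are made up for illustration, and the two constants are the standard defined values of the astronomical unit and the speed of light. A round 6 billion km works out to roughly 40.1 AU and 5.56 light-hours, so the quoted figures agree to within rounding of the distance.

```python
# Back-of-the-envelope unit conversion (function names are illustrative).
AU_KM = 149_597_870.7          # one astronomical unit, in kilometres
LIGHT_SPEED_KM_S = 299_792.458  # speed of light, in kilometres per second


def km_to_au(km: float) -> float:
    """Convert a distance in kilometres to astronomical units."""
    return km / AU_KM


def km_to_light_hours(km: float) -> float:
    """Convert a distance in kilometres to light-hours."""
    seconds = km / LIGHT_SPEED_KM_S
    return seconds / 3600.0


if __name__ == "__main__":
    distance_km = 6e9  # the rounded distance quoted in the post
    print(f"{km_to_au(distance_km):.1f} AU")
    print(f"{km_to_light_hours(distance_km):.2f} light-hours")
```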

This is what Carl Sagan had to say about the image:

Look again at that dot. That's here. That's home. That's us. On it everyone you love, everyone you know, everyone you ever heard of, every human being who ever was, lived out their lives. The aggregate of our joy and suffering, thousands of confident religions, ideologies, and economic doctrines, every hunter and forager, every hero and coward, every creator and destroyer of civilization, every king and peasant, every young couple in love, every mother and father, hopeful child, inventor and explorer, every teacher of morals, every corrupt politician, every "superstar," every "supreme leader," every saint and sinner in the history of our species lived there--on a mote of dust suspended in a sunbeam.

The Earth is a very small stage in a vast cosmic arena. Think of the rivers of blood spilled by all those generals and emperors so that, in glory and triumph, they could become the momentary masters of a fraction of a dot. Think of the endless cruelties visited by the inhabitants of one corner of this pixel on the scarcely distinguishable inhabitants of some other corner, how frequent their misunderstandings, how eager they are to kill one another, how fervent their hatreds.

Our posturings, our imagined self-importance, the delusion that we have some privileged position in the Universe, are challenged by this point of pale light. Our planet is a lonely speck in the great enveloping cosmic dark. In our obscurity, in all this vastness, there is no hint that help will come from elsewhere to save us from ourselves.

The Earth is the only world known so far to harbor life. There is nowhere else, at least in the near future, to which our species could migrate. Visit, yes. Settle, not yet. Like it or not, for the moment the Earth is where we make our stand.

It has been said that astronomy is a humbling and character-building experience. There is perhaps no better demonstration of the folly of human conceits than this distant image of our tiny world. To me, it underscores our responsibility to deal more kindly with one another, and to preserve and cherish the pale blue dot, the only home we've ever known.

Both the image and the quote were sourced from Wikipedia. I added the arrow to point out the location of the pale blue dot. Yes, it's that small. In fact, according to NASA, it is less than a pixel.


How to handle bug reports

I recently tested the Go language (a language invented by a star-studded team, including Ken Thompson, co-creator of Unix and of B, the predecessor of C). However, it didn't go smoothly. I encountered a problem: I could not compile a simple hello world program. It gave out a cryptic error message that no search engine in the world could find.

Concerned, I raised a bug report. Mind you, it's not a support request, not a question. It's a __BUG REPORT__.

I received two kinds of responses.

(1) The first one was short and sweet, and closed the ticket immediately, basically telling me not to ask questions there.

But I wasn't asking a question. I was reporting what I thought to be a BUG!

This very unhelpful response was a big turn-off. How would you encourage people to use your product if you ignore bug reports on your public bug tracker?

I quickly replied with a piece of my mind.

My retort attracted a second response.

(2) This second response was a lot more helpful. It gently informed me that this was probably a problem of my own making; it linked to another ticket which had similar symptoms to the one I reported, and showed me how my issue could be caused by the same problem as described in the other ticket.

This response motivated me to do more investigation, and it turned out that the problem was indeed on my side. Hence, I replied in kind and moved on, after thanking the second responder.

No cookie for correctly guessing which one wins another customer (if this were a commercial project).

______________

So what's the takeaway here?

With response (2): I learned that Go uses files with the .a extension that aren't libraries, and therefore should not be stripped.

But more importantly, others will learn the same thing (once the issue is indexed by search engines, next time someone else tries looking it up, they won't find empty results).

And finally, perhaps, just perhaps, the Go developers themselves will learn that adopting a file extension (.a) that already has an existing, different meaning, is probably not the best idea.
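As an illustration of how a packaging script could tell the two kinds of .a file apart before stripping, here is a minimal Python sketch. It rests on an assumption that is not stated in the ticket: that a Go package archive is written in the Unix ar container format with a first member named `__.PKGDEF`. The function names are hypothetical, and this is a heuristic, not an official detection method.

```python
# Heuristic sketch: guess whether a ".a" file is a Go package archive.
# Assumption: Go package archives are ar containers whose first member
# is named "__.PKGDEF". Function names are made up for illustration.
from typing import Optional

AR_MAGIC = b"!<arch>\n"   # global header of the Unix ar format
AR_HEADER_SIZE = 60        # fixed size of each member header
AR_NAME_SIZE = 16          # member name field, space-padded


def first_ar_member(data: bytes) -> Optional[str]:
    """Return the name of the first ar member, or None if not an ar file."""
    if not data.startswith(AR_MAGIC):
        return None
    header = data[len(AR_MAGIC):len(AR_MAGIC) + AR_HEADER_SIZE]
    if len(header) < AR_HEADER_SIZE:
        return None
    name = header[:AR_NAME_SIZE].decode("ascii", "replace").rstrip()
    # GNU ar terminates short names with "/", so drop it if present.
    return name.rstrip("/")


def looks_like_go_archive(data: bytes) -> bool:
    """True if the data looks like a Go package archive (do not strip)."""
    return first_ar_member(data) == "__.PKGDEF"


def should_skip_strip(path: str) -> bool:
    """Read just enough of the file to apply the heuristic."""
    with open(path, "rb") as f:
        return looks_like_go_archive(f.read(len(AR_MAGIC) + AR_HEADER_SIZE))
```

A build script could call `should_skip_strip(path)` on each .a file and leave the matching ones alone.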

What do we learn from response (1)? Go away, and Go use another language.

___________

Sadly, an attitude like (1) is very common in the IT world - especially in the commercial world. (I know Go isn't commercial, but I'm willing to bet that most of its core developers and support staff are employees).

Read the whole saga yourself: https://github.com/golang/go/issues/50509


Xdialog ported to GTK+ 3

Xdialog is a popular program to create a simple GUI for shell scripts. It is easy to use and implements the most commonly used GUI elements (e.g. input boxes, message boxes, etc). It was one of the earliest programs of its kind. Sure, there are tons of similar programs nowadays, some with even more powerful features - kdialog, zenity, yad, and I'm sure there are a lot more ... but Xdialog has two things going for it.

1. It's available almost anywhere.

2. Its command-line interface (the parameters you pass to it, to get the GUI you want) has been stable and unchanged for the last 20 years.

Any program you write with Xdialog will most probably keep working, while the same can't easily be said for the others.
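To give a feel for what that command-line interface looks like, here is a small sketch that builds an Xdialog command line and, if Xdialog is installed, runs it. It is written in Python rather than shell purely for illustration; the helper names are made up. It assumes the usual dialog-style argument order (box option, text, height, width), that a height and width of 0 ask Xdialog to auto-size the box, and that exit status 0 means "Yes"/"OK".

```python
# Illustrative wrapper around the Xdialog command line (helper names are
# hypothetical). Assumes dialog-style arguments: --<box> text height width.
import shlex
import shutil
import subprocess
from typing import List, Optional


def xdialog_argv(box: str, text: str, title: str = "",
                 height: int = 0, width: int = 0) -> List[str]:
    """Build the argument list for a simple box such as msgbox or yesno."""
    argv = ["Xdialog"]
    if title:
        argv += ["--title", title]
    # 0 0 is assumed to let Xdialog pick the size automatically.
    argv += [f"--{box}", text, str(height), str(width)]
    return argv


def ask_yes_no(question: str, title: str = "") -> Optional[bool]:
    """Show a yes/no box; return None when Xdialog is not installed."""
    if shutil.which("Xdialog") is None:
        return None
    result = subprocess.run(xdialog_argv("yesno", question, title))
    return result.returncode == 0  # assumed: 0 means "Yes"


if __name__ == "__main__":
    # Print the equivalent shell command, then try to run it.
    print(shlex.join(xdialog_argv("yesno", "Continue?", "Demo")))
    print(ask_yes_no("Continue?", "Demo"))
```

The printed line is what a shell script would run directly, which is the whole appeal: the same one-liner has worked for a very long time.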

There is only one problem. Xdialog is old, and for the longest time it has only been available for GTK+ 2. GTK+ 2 has been end-of-life for about a decade now, although it is still popular.

The replacement for GTK+ 2 is GTK+ 3, so it's a reasonable migration path (as opposed to Qt for example, which requires a total re-write).

So far, I have not found a port of Xdialog to GTK+ 3, so I decided to start my own. The motivation is simple: so that when we move to a GTK+ 2-less future (only pure GTK+ 3), existing shell scripts that use Xdialog still work (we have a ton of those).

The result is here: http://chiselapp.com/user/jamesbond/repository/xdialog/index

The code in the repository compiles with both GTK+ 2 and GTK+ 3 (the original Xdialog compiles with GTK+ 1 and GTK+ 2). Log in as anonymous in order to download the tarball. The port retains almost all of the features of the original Xdialog (except that the "unavailable" status is not supported for radiolist/checklist/buildlist).

PS: While in the process of porting it, I found that somebody had already done that here: https://github.com/wdlkmpx/Xdialog. I ended up borrowing some of the code from there to speed up the porting process, although the final code that went into my repository is different from wdlkmpx's. Another difference is that I tried to retain all the existing functionality, while wdlkmpx's port has dropped some of the lesser-used features (which he documented in his version of the Xdialog manual).


The state of FOSS today

Somebody recently talked up Okular. It's the best PDF viewer, they say. You can view a lot of other stuff, not only PDF, but also stuff like XPS, DjVu, CBR, and many others. And it has annotation ability. And form-filling. And a lot of other nice stuff. Supposedly.

Wow. Nice. I already have a working and nice PDF viewer (Evince and qpdfview), but this Okular sounds so much better! I've really got to see it!

So what's the next logical stop? Try it, of course.

So I head to the website, and then on to the download page.

And immediately I see that we have a problem, Houston.

There is an option to download it from the KDE Software Centre, since, well, Okular is a sub-project of KDE. There is no surprise here. It requires KDE to run, at least, KDE libraries.

But I don't have KDE.

And I can't install KDE from my repository either!

Why? Oh, it's only because I'm running my own build-from-source Linux (=Fatdog64), so I won't have KDE in my repository until I build KDE myself. But KDE is a big project; building the whole thing just to __try__ to use a PDF viewer sounds ... silly.

But that's not a problem in 2021, right?

Everybody delivers their programs in AppImage.

Well, no.

At least not this Okular.

Well no problem, just use Flatpak! Okular supports Flatpak!

Sorry, no cigar either.

Flatpak isn't just a packaging mechanism, it's an entire ecosystem.

The host OS needs to support Flatpak in the first place, before it can be used.

Fatdog64 doesn't have Flatpak support, and I'm not about to add it either (because it requires another bajillion dependencies to be built) - certainly, not for the sole purpose of testing a PDF viewer.

So what else can I do?

I see that Okular provides Windows binaries.

But I don't run Windows!

Yeah, but that's what "wine" is for, right?

So I downloaded said Windows binary, launched it with wine, and only then did I get to see the wonderful PDF viewer. No amount of words can explain my jubilation, finally.

It gives me such a warm feeling that I, someone who runs a FOSS operating system, have such difficulty running another piece of FOSS software on it. Instead, I have to resort to using a binary meant for a closed-source OS in order to run said FOSS software on a FOSS OS.

Merry Christmas everyone.

PS1: if you're wondering what's the point of this post: if the Okular team can spend the effort to make a Windows binary (with all the dependencies compiled in), why can't they do the same thing for other Linux users, in AppImage format?

By all means, pander to Windows users if you have to - but remember where you came from, would you? Nobody cares for your stuff in the Windows world: Adobe makes the most comprehensive PDF tools (viewer, editor, what have you) in the entire Windows universe. Only folks in the FOSS world do, and you're not making it easy for them.

PS2: Oh, and if you're an AppImage packager, I have a message for you too.

Please, please, __resist__ the temptation to build your stuff on systems with the latest glibc, especially the one released last month. It makes said AppImage unrunnable for people whose OS happens to have a glibc from, say, 3 years ago, which is not that long ago.

Remember that the whole point of AppImage is to make it distro-independent, and this includes older distros, not only those released in 2021?

Even a project as complex as VirtualBox can run on truly ancient glibc, because they realise that backward-compatibility is important.
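To make the glibc point concrete: a binary carries symbol version tags such as `GLIBC_2.17` (tools like `objdump -T` list them), and it can only run where the host glibc is at least as new as the newest tag it needs. Below is a minimal Python sketch of that comparison. The function names and the sample tag lists are made up for illustration; `host_glibc()` relies on `os.confstr`, which only reports a value on glibc-based systems.

```python
# Sketch: will a binary's required GLIBC symbol versions run on this host?
# Function names and sample tag lists are illustrative assumptions.
import os
import re
from typing import Iterable, Optional, Tuple

Version = Tuple[int, ...]


def parse_version(tag: str) -> Version:
    """Turn 'GLIBC_2.17' or 'glibc 2.28' into a comparable tuple (2, 17)."""
    match = re.search(r"(\d+(?:\.\d+)+)", tag)
    if match is None:
        raise ValueError(f"no version number in {tag!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def newest_required(tags: Iterable[str]) -> Version:
    """The newest version tag is the one that decides compatibility."""
    return max(parse_version(tag) for tag in tags)


def runs_on(tags: Iterable[str], host: str) -> bool:
    """True if the host glibc is at least as new as every required tag."""
    return newest_required(tags) <= parse_version(host)


def host_glibc() -> Optional[str]:
    """Return e.g. 'glibc 2.31', or None on non-glibc systems."""
    try:
        return os.confstr("CS_GNU_LIBC_VERSION")
    except (AttributeError, ValueError, OSError):
        return None


if __name__ == "__main__":
    built_on_old_system = ["GLIBC_2.2.5", "GLIBC_2.17"]
    built_on_new_system = ["GLIBC_2.2.5", "GLIBC_2.34"]
    host = host_glibc() or "glibc 2.28"  # fall back to a sample value
    print(host, runs_on(built_on_old_system, host))
    print(host, runs_on(built_on_new_system, host))
```

This is why building on an older system helps: the newest tag pulled in stays low, so the result runs on both old and new distros.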

Yeah. Thanks for listening.


Cowboy Bebop Netflix Live Action 2021

"The Abolition of Man" was a heavy read, and its consequences weighed even heavier on my mind, so I thought I'd take a break and do something fun, like watching something light and entertaining. Netflix launched the live-action adaptation of Cowboy Bebop on November 19. That should be good, right? (despite many previous dire warnings ...)

If you don't know what Cowboy Bebop is, you probably don't want to read the rest of this long post anyway. But if you still want to know, it is an iconic anime from the turn of the millennium.

If you don't even know what anime is ... well, I think you should really leave now, unless you really want to go down the rabbit hole. But again, if you really want to know, "anime" is the Japanese word for "animation", that is, what we in the western world call "animated movies" or "cartoons".

For the benefit of those who really don't know (who am I kidding ... if you get this far, you must be familiar with it; otherwise, why keep reading a very long post containing my silly opinion on a silly cartoon show? There are more important things to take care of in the world ...), "Cowboy Bebop" is the story of a group of futuristic bounty hunters living in a spaceship called the "Bebop" ("cowboy" being the in-universe derogatory term for "bounty hunter", so the title could roughly be translated as "Bounty hunters who live in a spaceship named the Bebop"). There are five major characters - Spike Spiegel, Jet Black, Faye Valentine (the three are the "cowboys"), Ed (who is a girl), and Ein (who is a dog).

What's so interesting about bounty hunters anyway? Well, there is a reason why this show is iconic. You really have to watch it and decide for yourself. People have different tastes and preferences. Some people say it's iconic because it is the first anime that uses jazz music (but that's not true). Some people say it's because of the animation quality (which isn't bad, but there are other anime that have good animation quality too), etc. I was skeptical before I watched it myself more than a decade ago, but after I did, I agreed that this was overall a good show. (If you have Netflix, the anime version of Cowboy Bebop is there too, all 26 episodes of it. There is also a movie which isn't on Netflix, but you don't miss much if you don't watch it, although I think the opening song of the movie is cool.)

OVERVIEW

--------

Now, I'm here to talk about the live-action adaptation. Many youtubers and podcasters have commented on this, and usually I don't bother to comment on things that people have already commented on - but I will make an exception here. I have to emphasise that my opinions are my own; if they sound similar to what others have said, it's probably because we all share a similar sentiment.

There is a meme that any Netflix adaptation ends up turning into trash. I don't know how true that is, as I don't watch Netflix that much (there are more important things to do in life than spending all of your free time watching other people's imagination), but I will say that in the case of Cowboy Bebop, they did a very bad job of it.

Season 1 was released with 10 episodes in it. I watched the first episode and my stomach churned. But I might be biased, so I gave it another chance and watched the second episode. It made me want to throw up, but, hey, third time's lucky, right? After watching the third episode I think I have tortured myself enough. To top it off, I happened to watch a video clip of the last few minutes of episode 10. When I watched it, I thought it was a joke made by someone who hated Netflix (or the show, or both). I went back to Netflix to check it ... lo and behold, that extremely tasteless cliffhanger __was__ there. (It's so bad that even if you don't know anything about the show, I __guarantee__ you that you will be turned off too.)

A lot of the opinions so far have talked about the actors in the adaptation, about the various race-swaps and gender-swaps (which seem to be a feature of any movie in the last few years or so). I don't want to talk about these; I think those points have been well rehearsed everywhere else. I don't necessarily agree or disagree with these opinions, but today that's not what I want to talk about.

I'm a writer, so my point is focused more on the story, the plot, and the characters.

To start off, this thing is supposedly a "live-action adaptation". It's not a reboot. It's not a remake. It's not a re-imagination. It is an "adaptation". And yes, sure, adaptation means you have the freedom to change some of the details, insert new stories or previously unexplained back stories, etc. But it has to be consistent; at least, it has to be consistent with what has been established before (otherwise, it's really a different show, isn't it?)

And lack of this consistency is why it sucks and sucks so badly.

THE CHARACTER

-------------

I will start with the bold conclusion that, just by looking at the characters alone, the live-action adaptation of Cowboy Bebop is __not__ Cowboy Bebop. Or, equivalently, it is Cowboy Bebop in name only. Yes, they have characters with the same names, these characters live in the same ship, the same universe, supposedly - but __everything else__ is different.

Let's start with Spike.

The anime Spike isn't the same as the live-action Spike. They don't share the same principles, they don't behave in the same way, they don't interact with others in the same way. They have different values, they care about different things.

There are so many differences, but I will mention only one, which is enough to spoil everything.

* The anime Spike is a man who doesn't care whether he lives for the next day or not.

* The live-action Spike pretends to not care whether he lives or not, but in reality, he really does __not__ want to die.

The difference seems subtle, but it's not. The anime Spike is a man who has nothing left to lose. A lot of the (careless) actions of anime Spike can be attributed to his attitude to life; they couldn't be explained otherwise.

The live-action Spike, however, is the opposite. He really cares about living and continuing to live. So why does he behave so carelessly too? Because he's a hero? Because he's kind-hearted? Because he's arrogant? Because he's a jerk? Because he's just careless? Pick any reason you want - you will still find that this is not the anime Spike in the end.

How about Jet?

In the anime, Jet was like a big brother to Spike. He had his own share of bitter past, and like Spike, he had resigned himself to it and just lived day by day. Being older, he was a tad wiser, and knowing Spike's past, he acted as more than just a friend and a partner; he acted as a sort of surrogate big brother to Spike. Of course, he got annoyed from time to time - but he didn't pick fights or whine for the sake of being whiny. He was trying to turn Spike into a better man.

The live-action Jet, however, was just a whiny partner. He was whiny, and complained too much, just for the sake of being whiny and irritating. I understand the writers' intention to intensify the "sarcastic banter" between the two, but I think they dialled it up way too much, to the point that Jet became an annoying character. Really, with the amount of animosity that Jet showed towards live-action Spike, I wonder why he didn't just kick him out already (the "Bebop" ship belonged to him anyway).

I mean, I wonder why the live-action Jet took the live-action Spike as a partner to begin with. He didn't know Spike's past (Spike is basically lying to him, which is yet another trait that differentiates anime Spike from live-action Spike), but still, if you're an ex-policeman turned bounty hunter (with potentially lots of enemies), you __really__ want to check that your future live-in partner won't want to slit your throat while you are sleeping, don't you? Sheesh.

As for Faye - I can't say much about Faye. I only saw her in the first episode, and she didn't get much airtime there. Not saying I like it, or agree with her acting or writing; but I've just not seen enough to make a decision.

But surprisingly, I have seen Vicious in the live-action, and too much of that, for that matter, in just 3 episodes.

In the anime, Vicious was not introduced until a bit later, and even then, when introduced, he was not presented as anything special, other than being a person from Spike's murky past. Only much later was it revealed that in addition to being a highly skilled fighter, he is also a masterful strategist; ambitious and cold as a block of ice. It is easy to imagine that a person of this quality could rise through the ranks to become a high-ranking official of the mob. He was Spike's equal (or copy) in almost everything, except that Spike had left the dark side and he chose not to. That's why he was such a threatening archenemy: because they were equals.

The live-action Vicious is really a parody of the anime Vicious. Firstly, for a supposedly important archenemy, you reveal him in the first episode, with his name, his face and whatnot. That's a real grand entrance, boys and girls. First episode! Secondly, and most importantly, you will quickly learn that the most prized trait of this "grand enemy" is his nastiness. Other than that, he's an easy-to-anger, greedy, careless, incompetent petty thug who likes to claim how "good" he is. He's so weak that Spike could easily kill him anytime he wanted. And then you make the entire season's plot the conflict between these two guys? Come on. I can't even reasonably imagine how the live-action Vicious earned his position in the mob.

THE PLOT

--------

And then we have the plot. The anime was a series, not a serial. You can watch the episodes in any order, apart from the two-parters and the "deciding episodes" (where a member joined or left the Bebop), without missing anything.

The live-action, however, made it obvious that it was a serial. You have to watch all of them, and __in order__, or you'll miss the plot, literally.

But that's not the point of contention. It's okay to "adapt" a series into a serial, provided that you do it right. Provided you can capture the spirit of the show. As I said above, you don't have to keep all of the original material. You can keep some, and insert other new material - as long as you remain consistent with what the show is all about.

The main plot of the anime is how three broken people, people who have no hope for the future because of their broken past, continue to stay alive as they slowly learn to overcome their brokenness and learn to look forward to the future, because of the odd kind of friendship formed in the events that they experience together.

The main plot of season 1 (based on what I've seen in the 3 episodes, and based on the one-sentence plot summary provided by Netflix itself) seems to be the on-going conflict between Spike and Vicious.

Of the many plots they could have used, this one - for me at least - is the least interesting there is. The live-action shows Vicious as early as the first episode, and it tracks what he does in almost every episode (at least in the episodes that I watched). At 3 episodes, I still hadn't formed any bond with the characters (Spike or Jet), and I couldn't care less about their supposed "archenemy". Archenemies are only important once I'm emotionally invested enough in the characters; but this certainly doesn't happen in episode 1, 2 or 3.

Okay, there is conflict between Spike and Vicious in the anime too, but it __isn't__ part of the main plot. The open conflict is only shown in two episodes - the episode which introduced Vicious, and an episode where the two have the final showdown. For the most part, they didn't care about each other, unless there was some shared interest (or they accidentally crossed paths). Otherwise, Spike couldn't care less about Vicious, and Vicious wasn't obsessed with murdering Spike either. So why elevate this highly unimportant sub-plot to be the __highlight__ of the show?

I have had enough of watching shows about two guys with a personal conflict having a go at each other. It simply doesn't click for me; it's __not__ entertaining. Firstly, as I said, I don't care about the conflict if I don't care about the characters. Secondly, I don't have to watch movies for that; the newspapers write about this kind of stuff every day.

THE SHOW

--------

Then the show itself. Being a show about bounty hunting, there are bound to be fights, shootings, explosions, etc. But the anime makes it clear that it is not an __action__ show. Every scene is there for a reason. A fight, for example, is not there just for the sake of showing the fighting, but also to show the (sometimes hard) choices that the characters have to make during the fight (or in choosing not to fight).

In the live-action, the fighting is there just for the sake of the fighting. I'm not saying the fight scenes are bad in themselves, but they really felt like generic fight scenes without any emotion in them, with no connection to the story (other than the sarcastic remarks - something which was used tastefully in the anime, but so excessively in the live-action that it became farcical and predictably boring). If I want to watch an action movie with a lot of fights, there are much better shows.

In the anime, the fighting is there to advance the plot. In the live-action, the fighting is there for the sake of showing off the fights.

And then the constant bickering between Spike and Jet. In the anime, they don't bicker. They argue, but not all the time. Most of the time they are in (silent) agreement. When there are serious problems, they don't just bicker. Things happen. Spike left the Bebop temporarily because of a big argument with Jet. That's how adults behave.

In the live-action, they constantly bicker with each other. It's really unbearable listening to two grown-up men who are supposed to be friends and partners constantly being sarcastic to each other. As I said earlier, I find it extremely odd that Jet doesn't just kick Spike out of his ship already; and I wonder how Spike could put up with Jet's whining.

It feels funny how they try to bring more "realism" into the live-action adaptation, and try to "explore" the "depth of the emotion" experienced by the characters by amplifying their edgy traits, but only succeed in making the live-action characters a parody of the anime's version of them.

Oh, and one last thing (ending of Episode 1 - you can compare the live-action and anime version): In space, nobody can hear you when you scream. Or plead. Don't forget that.

CONCLUSION

----------

I am not the only one who complains about it. I watched the original Cowboy Bebop anime back in 2003 or 2004, and I have re-watched the series twice in the intervening years. On the last re-watch, my son watched with me as well.

We both watched the live-action, and we more or less came to the same conclusion. In fact, he told me that he specifically doesn't want to watch any further, as the live-action __ruins__ his experience. And my son is in the millennial generation, supposedly the target audience for this kind of show.

This show is Cowboy Bebop in name only; but otherwise it's not even close to the anime.

Enjoy the show if you like it, but for me, the show ends at episode 3. The whole thing just looks and feels like a cheap comedy about bickering partners and a soulless generic action show. The spirit that makes "Cowboy Bebop" iconic is simply missing in the live-action adaptation. I would rather use my free time to do other things (or watch better shows, perhaps a repeat of the Cowboy Bebop anime).


The Abolition of Man

I've just finished reading it, "The Abolition of Man", by C.S. Lewis. At about 81 pages (excluding the generous appendix and notes), it is a very worthwhile investment of your time. If you think that "1984" is prophetic, wait until you read this book.

If you espouse the idea of The Great Filter - you might finally have a glimpse of what it could be.


More apologies ...

If you apologise without admitting you're wrong, does it still count as an apology? If you apologise without promising you won't do it again, does it still count as an apology?

If you apologise without reparations, does it still count as an apology?

If you apologise on behalf of someone else, while that someone else clearly is neither sorry nor repentant, does your apology even have meaning?


Apologies, and donations

If you are forced to apologise, does it still count as an apology? If you are forced to make a donation, does it still count as a donation?

If you are forced to be kind, does it mean that you are a kind person?

Is the action more important than the motive? Or is the motive more important than the action?
